Intraobserver Reliability on Classifying Bursitis on Shoulder Ultrasound - Tyler M. Grey, Euan Stubbs, Naveen Parasu, 2023

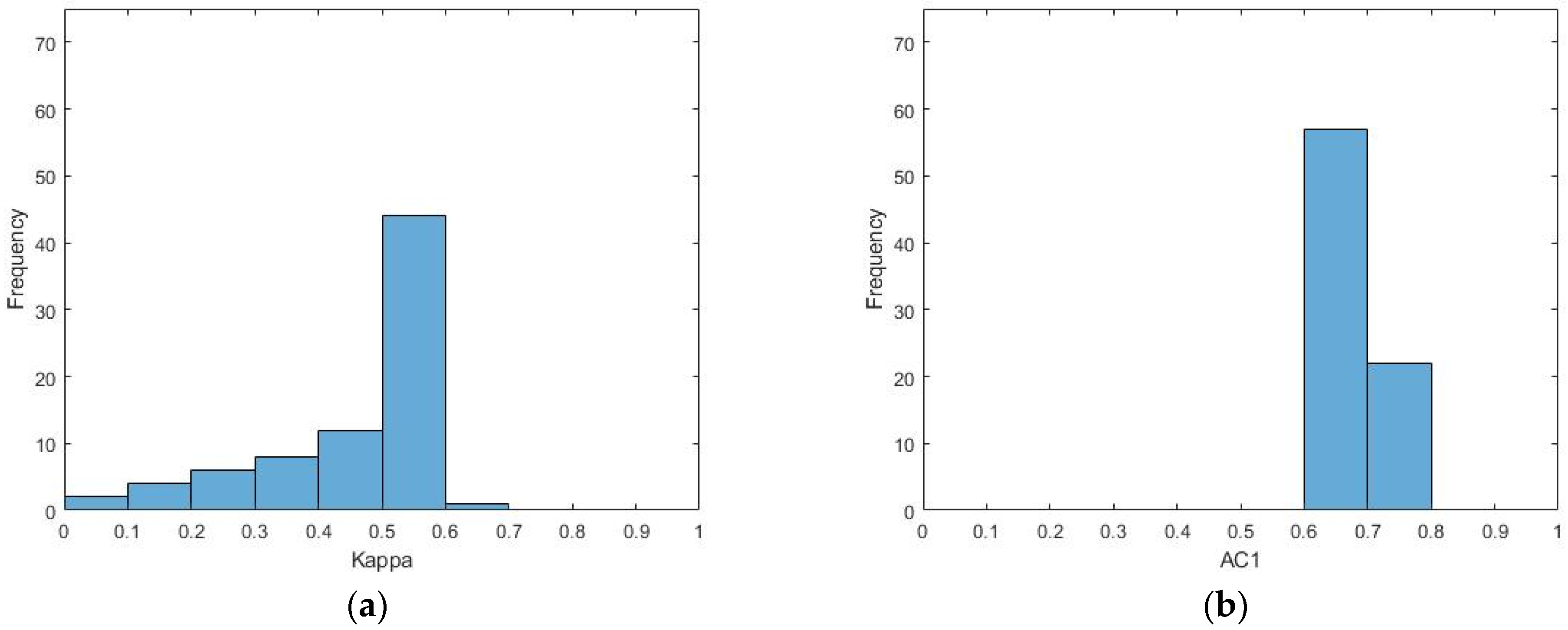

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

Average Inter-and Inter-Observer with Kappa and Percentage of Agreement... | Download Scientific Diagram

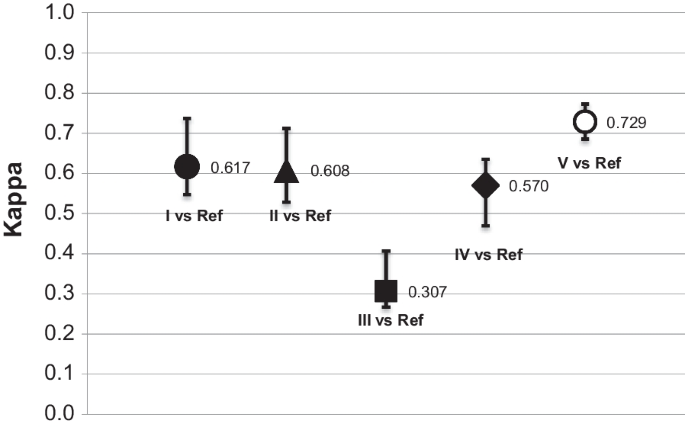

Figure . Level of intraobserver agreement according to Kappa statistic... | Download Scientific Diagram

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science

Inter- and intra-observer agreement in the assessment of the cervical transformation zone (TZ) by visual inspection with acetic acid (VIA) and its implications for a screen and treat approach: a reliability study

Interobserver and intraobserver agreement of three-dimensionally printed models for the classification of proximal humeral fractures - JSES International

Intra and Interobserver Reliability and Agreement of Semiquantitative Vertebral Fracture Assessment on Chest Computed Tomography | PLOS ONE

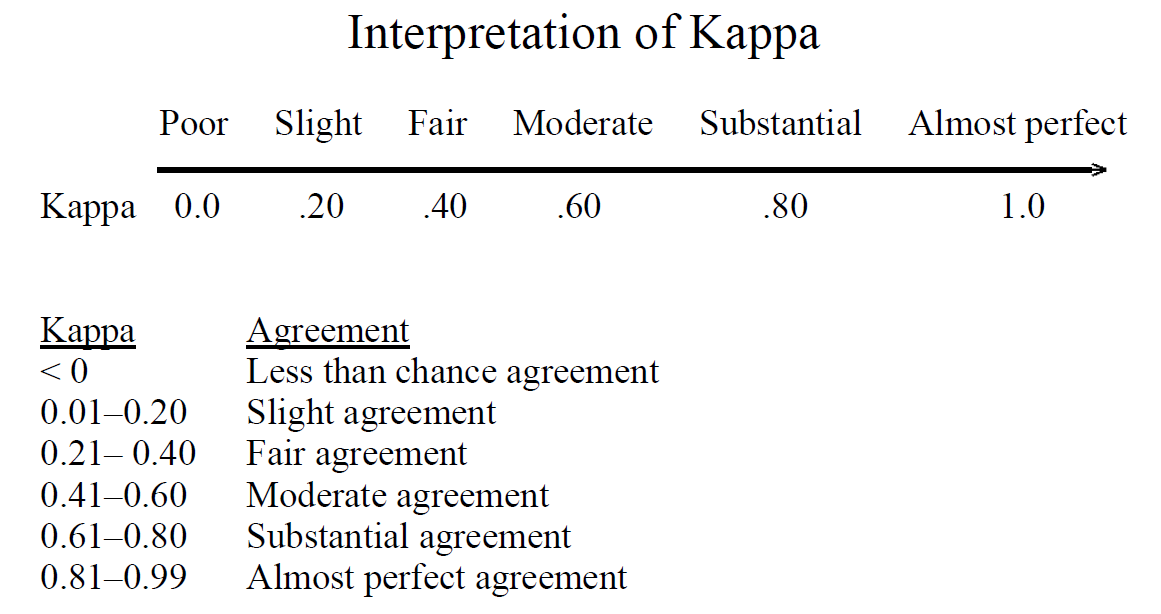

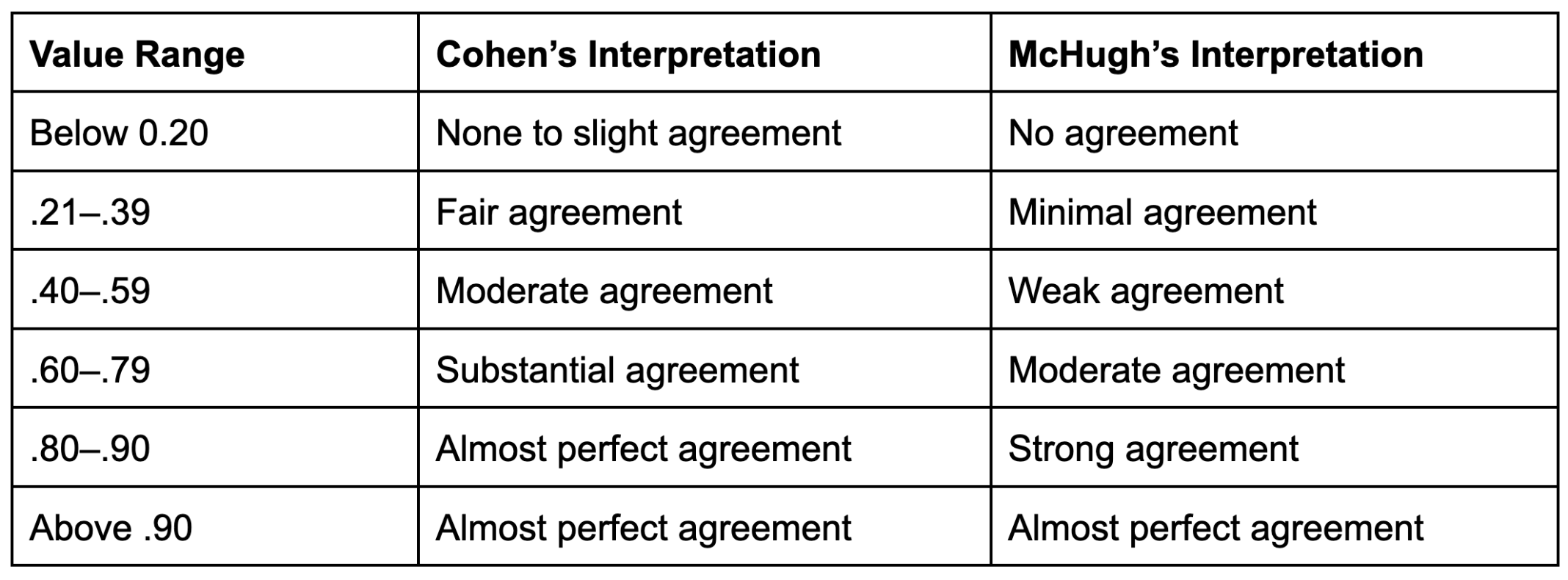

![Understanding interobserver agreement: the kappa statistic (PDF) | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/2-Table1-1.png)

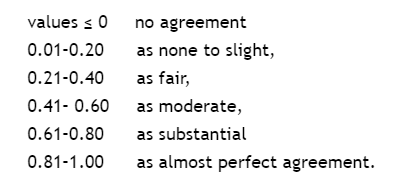

![Understanding interobserver agreement: the kappa statistic (PDF) | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e45dcfc0a65096bdc5b19d00e4243df089b19579/3-Table3-1.png)